Abstract

Global illumination (GI) is essential for realistic rendering but remains computationally expensive due to the complexity of simulating indirect light transport. Recent neural methods for GI have focused on per-scene optimization with extensions to handle variations such as dynamic camera views or geometries. Meanwhile, cross-scene generalization efforts have largely remained confined in 2D screen space—such as neural denoising or G-buffer–based GI prediction—which often suffer from view inconsistency and limited spatial awareness.

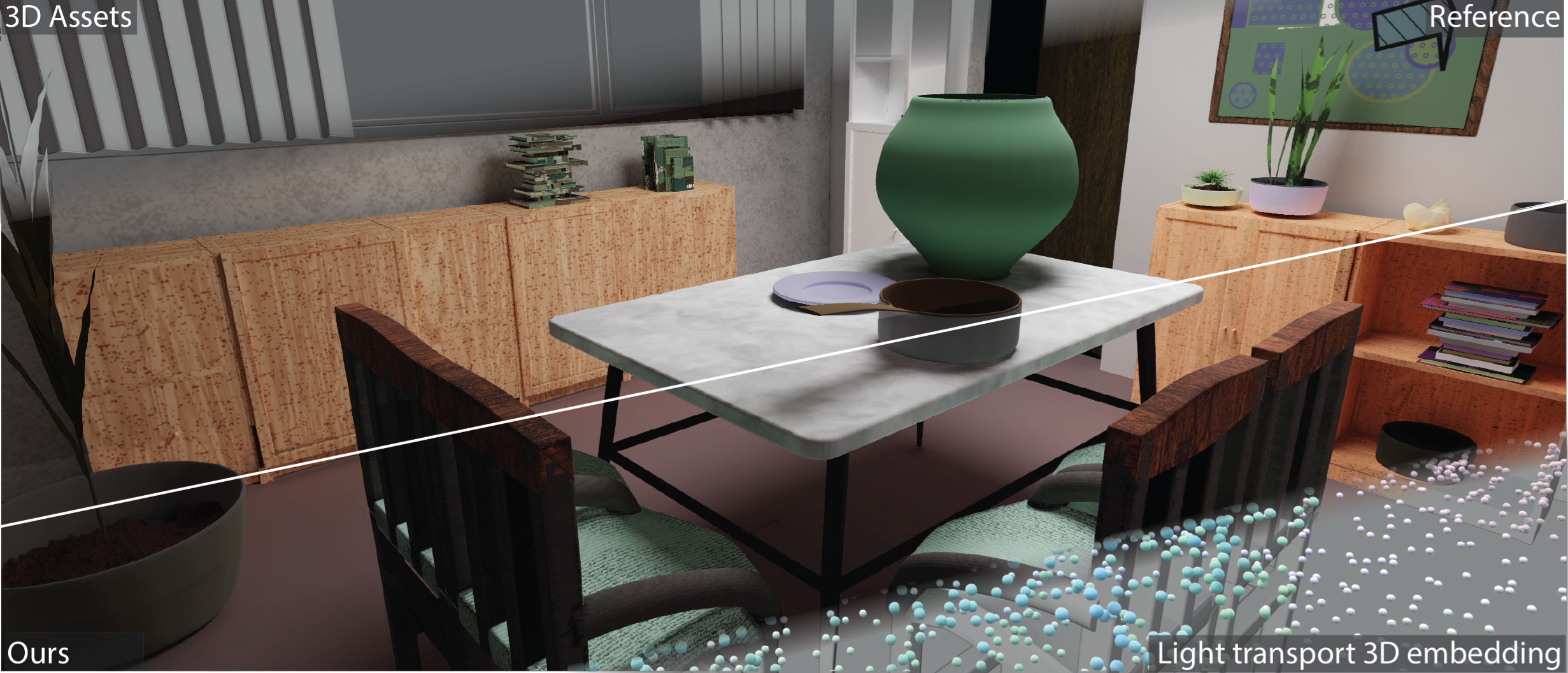

In this paper, we learn a generalizable 3D light transport embedding that directly approximates global illumination from 3D scene configurations, without utilizing rasterized or path-traced illumination cues. We represent each scene as a point cloud with features and encode its geometric and material interactions into neural primitives by simulating global point-to-point long-range interactions with a scalable transformer. At render time, each query point retrieves only local primitives via nearest-neighbor search and adaptively aggregates their latent features through cross-attention to predict the desired rendering quantity.

We demonstrate results for estimating diffuse global illumination on a large indoor-scene dataset, generalizing across varying floor plans, geometry, textures, and local area lights.

We train the embedding on irradiance prediction and demonstrate that the model can be quickly re-targeted to new rendering tasks with limited fine-tuning. We present preliminary results on spatial-directional incoming radiance field estimation to handle glossy materials, and use the normalized radiance field to jump-start path guiding for unbiased path tracing.

These applications point toward a new pathway for integrating learned priors into rendering pipelines, demonstrating the capability of predicting complex global illumination in general scenes without explicit ray traced illumination cues.